Visibility is often discussed as a ranking challenge.

But before a page can be ranked, cited, summarized, or retrieved by search and AI systems, it must first be eligible for discovery. That eligibility depends on three foundational layers:

- Can it be stored?

- Can it be accessed efficiently?

- Can it be understood in context?

At Trebletree, we evaluate those layers through a framework we call ICR: Indexability, Crawlability, and Retrievability.

ICR is not a tactical checklist. It is a structural model for measuring modern discoverability across traditional search and AI-mediated retrieval systems.

What Is Indexability?

Indexability answers a simple but critical question:

Can this page be reached – and are we clearly signaling that it should be included in Google’s index?

This is the eligibility layer.

If search engines can’t access the page, or if conflicting signals suggest it shouldn’t be indexed, visibility never gets off the ground.

Indexability includes:

- Status codes and accessibility

- Robots directives and meta instructions

- Canonical signals and duplication management

- Redirect logic and URL consistency

- Clear intent around which URLs represent primary versions

Indexability is about clarity of inclusion.

Are we telling Google, unambiguously, “This page belongs in the index”?

When that clarity breaks down, storage becomes inconsistent. And inconsistent storage produces unstable visibility.
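To make "clarity of inclusion" concrete, here is a minimal sketch of how the signals listed above could be cross-checked for one page that has already been fetched. It is an illustration, not the ICR Checker's implementation: the function name `indexability_issues`, the helper class, and the wording of the messages are all hypothetical, and the `noindex` test is a deliberate simplification (a plain substring match that ignores user-agent-scoped directives).

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class _SignalParser(HTMLParser):
    """Collects robots meta directives and canonical link targets."""

    def __init__(self):
        super().__init__()
        self.robots = []
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values may be None.
        a = {k: (v or "") for k, v in attrs}
        if tag == "meta" and a.get("name", "").lower() == "robots":
            self.robots.append(a.get("content", "").lower())
        elif tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.canonicals.append(a.get("href", ""))


def indexability_issues(url, status, headers, html):
    """Return a list of conflicts found for one fetched page (hypothetical helper)."""
    issues = []
    if status != 200:
        issues.append(f"status {status} is not 200")

    parser = _SignalParser()
    parser.feed(html)

    # Header names are case-insensitive, so normalise them before lookup.
    header_value = {k.lower(): v for k, v in headers.items()}.get("x-robots-tag", "")
    directives = ",".join(parser.robots + [header_value.lower()])
    if "noindex" in directives:  # simplification: substring match only
        issues.append("noindex directive present")

    # Resolve relative canonicals so they can be compared with the page URL.
    targets = {urljoin(url, href) for href in parser.canonicals if href}
    if len(targets) > 1:
        issues.append("multiple conflicting canonical URLs")
    elif targets and targets != {url}:
        issues.append(f"canonical points elsewhere: {targets.pop()}")
    return issues
```

An empty list means the page sends one consistent "index me" message. Anything else is a signal conflict worth resolving before looking at rankings.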

What Is Crawlability?

Crawlability is about how search engine spiders move through your site.

Not just whether they can access a page – but whether they do so efficiently, logically, and within real system constraints.

Search engines operate with finite resources. They prioritize.

Crawlability evaluates:

- Internal linking hierarchy and depth

- Site architecture alignment with business structure

- Faceted navigation and URL expansion

- Orphaned or low-priority pages

- Resource-level constraints that affect processing

In enterprise and multi-location environments, crawl inefficiencies often stem from structure. Location hierarchies flatten. Filters multiply URLs exponentially. Templates introduce unnecessary overhead.

Nothing appears broken.

But crawl paths become diluted.

And diluted crawl paths weaken prioritization.

Crawlability is about making it easy for spiders to understand what matters most.
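Link depth and orphaned pages, two of the items above, can be approximated with a breadth-first search over an internal link graph. The sketch below assumes you already have that graph as a dictionary mapping each URL to the URLs it links to (for example, from a crawl export). The function names and the sample paths are hypothetical.

```python
from collections import deque


def click_depths(links, start):
    """Breadth-first search: minimum number of clicks from start to each URL."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphans(links, known_urls, start):
    """URLs we know exist (e.g. from a sitemap) that no link path reaches."""
    return sorted(set(known_urls) - set(click_depths(links, start)))


# Hypothetical multi-location site graph.
site = {
    "/": ["/locations", "/services"],
    "/locations": ["/locations/austin"],
    "/services": [],
}
print(click_depths(site, "/"))
print(orphans(site, ["/", "/locations/austin", "/old-promo"], "/"))
```

Pages that sit many clicks deep, or that never appear in the depth map at all, are the ones most likely to be deprioritized.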

What Is Retrievability?

Retrievability moves beyond traditional indexing and crawling.

It asks:

Once indexed, can your content be clearly interpreted and surfaced by AI-driven systems?

Retrieval is not ranking.

It is interpretation.

AI systems segment, embed, and assemble information. They rely on entity clarity, explicit relationships, and well-structured content boundaries.

Retrievability evaluates:

- Entity anchoring and disambiguation

- Structured data placement and consistency

- Clear content segmentation

- Explicit relationships between concepts, locations, or services

- Reduced ambiguity in topical intent

Many sites today are indexed and crawled successfully – yet struggle to appear in generative responses or contextual summaries.

The issue is rarely content volume.

It is structural clarity.

Retrievability ensures that your content is not just present – but interpretable.
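One rough way to inspect entity anchoring is to list the entities a page declares in its JSON-LD structured data and see which of them carry a stable `@id`. The sketch below is an assumption about how such a check might look, not a description of how any AI system reads a page. The `entities` function and the parser class are hypothetical names.

```python
import json
from html.parser import HTMLParser


class _JsonLdParser(HTMLParser):
    """Collects the raw text of every application/ld+json script block."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append("".join(self._buffer))
            self._in_jsonld = False


def entities(html):
    """Return the type, name, and @id of each entity declared in JSON-LD."""
    parser = _JsonLdParser()
    parser.feed(html)
    found = []
    for raw in parser.blocks:
        try:
            doc = json.loads(raw)
        except json.JSONDecodeError:
            found.append({"error": "invalid JSON-LD"})
            continue
        # A block may hold a single object, a list, or an @graph of objects.
        if isinstance(doc, dict):
            items = doc.get("@graph", [doc])
        elif isinstance(doc, list):
            items = doc
        else:
            items = []
        if not isinstance(items, list):
            items = [items]
        for item in items:
            if isinstance(item, dict):
                found.append({
                    "type": item.get("@type"),
                    "name": item.get("name"),
                    "id": item.get("@id"),
                })
    return found
```

Entities that come back with no `id`, or with a `name` that differs from page to page, are candidates for the ambiguity this layer is meant to reduce.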

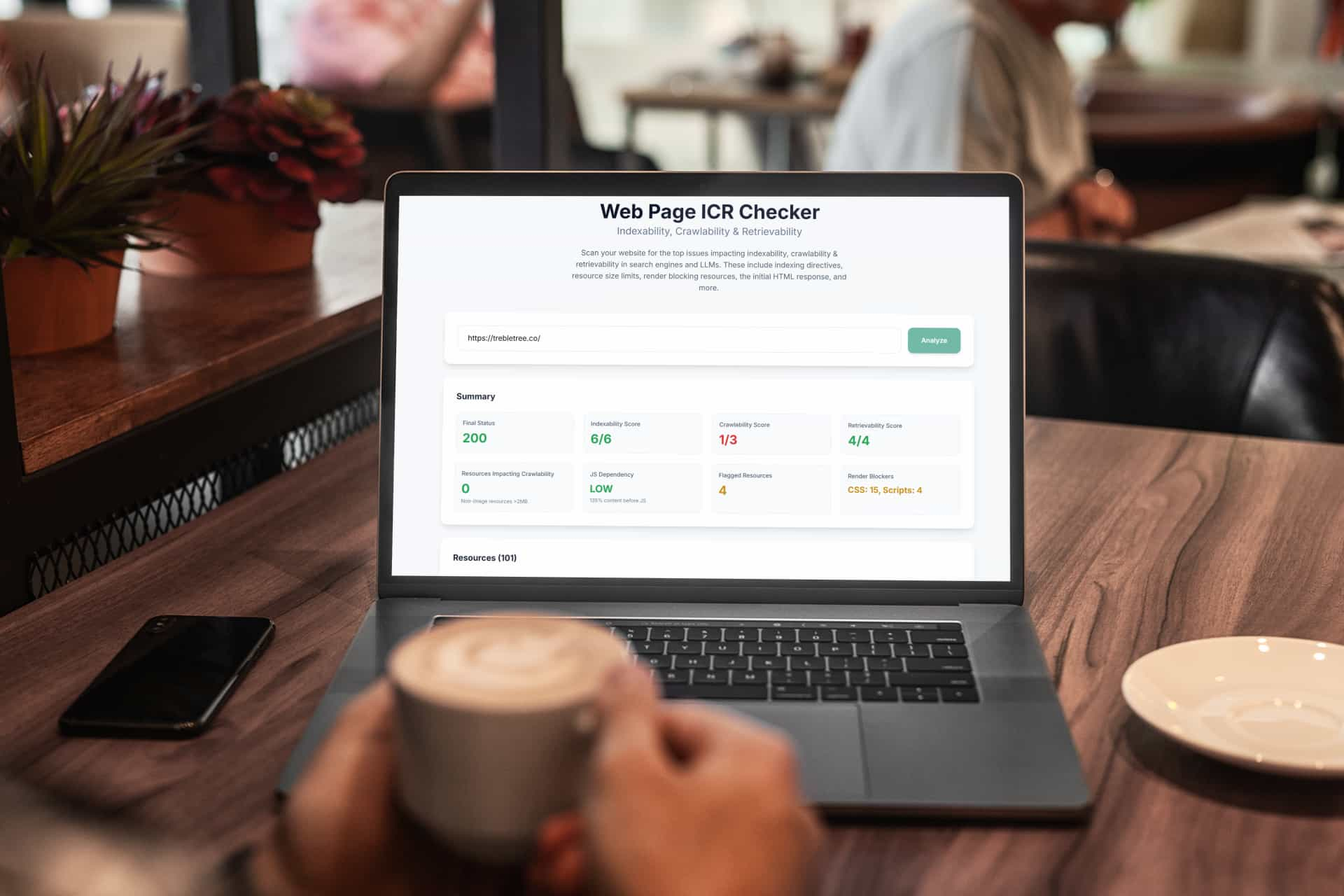

How We Measure ICR: The ICR Checker

A framework only matters if it can be measured.

To operationalize ICR, we built the ICR Checker – a diagnostic layer that evaluates a page across Indexability, Crawlability, and Retrievability signals.

This is V1. It is designed to surface structural risk quickly and clearly – not to replace a full architectural audit.

The checker evaluates:

- Index eligibility signals and directive alignment

- Per-resource processing constraints

- Signal placement within the HTML response

- Structured data positioning

- Foundational retrieval indicators tied to entity clarity and segmentation
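As an illustration of what "signal placement within the HTML response" can mean, the sketch below reports how many bytes into a document each key signal first appears. This is not the ICR Checker's code. The `signal_offsets` function and the regular expressions are hypothetical, and regex matching on HTML is a rough approximation that assumes the `name`, `rel`, or `type` attribute is quoted.

```python
import re

# Hypothetical patterns for three signals discussed in this article.
SIGNALS = {
    "robots meta": re.compile(r'<meta[^>]+name=["\']robots["\']', re.I),
    "canonical": re.compile(r'<link[^>]+rel=["\']canonical["\']', re.I),
    "json-ld": re.compile(r'<script[^>]+type=["\']application/ld\+json["\']', re.I),
}


def signal_offsets(html):
    """Return (total_bytes, {signal: byte offset of first match, or None})."""
    total = len(html.encode("utf-8"))
    report = {}
    for name, pattern in SIGNALS.items():
        match = pattern.search(html)
        if match is None:
            report[name] = None
        else:
            # Measure in bytes rather than characters, since responses
            # are processed as bytes on the wire.
            report[name] = len(html[:match.start()].encode("utf-8"))
    return total, report


page = (
    '<html><head><link rel="canonical" href="/a"></head><body>'
    + "x" * 1000
    + '<script type="application/ld+json">{}</script></body></html>'
)
total, report = signal_offsets(page)
for name, offset in report.items():
    print(name, "missing" if offset is None else f"{offset} of {total} bytes")
```

A signal that only appears late in a very large response is more exposed to whatever per-resource processing constraints apply than one placed near the top of the document.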

On the crawlability layer specifically, this version only scratches the surface. It highlights resource-level constraints and signal prioritization, but it does not yet model full internal link graphs or large-scale crawl path dynamics.

That depth still requires architectural analysis.

We’re actively expanding the checker to go deeper into what it truly takes to be:

- Reliably indexed

- Efficiently crawled

- Clearly retrieved

The goal is not to produce another audit tool.

It is to make discoverability measurable – and to expose which layer is constraining visibility before performance metrics decline.

You can explore the ICR Checker here:

https://icr.trebletree.co/

Discoverability Is Structural

Search environments will continue to evolve. Interfaces will change. Constraints will tighten. AI retrieval will expand.

What remains consistent is this:

Systems reward clarity.

ICR exists to measure that clarity across storage, processing, and interpretation.

Visibility is not just competitive positioning.

It is a structural property of your digital ecosystem.

And structural properties can be engineered.